TL;DR:

Thread safety in C# ensures correct behavior when multiple threads access shared data, preventing race conditions and data corruption. Use synchronization tools like lock, Monitor, Interlocked, and thread-safe collections to control concurrent access. Choose the right approach for your scenario to build reliable, high-performance multithreaded applications.

What is Thread Safety in C#?

Thread safety in C# means your code can be safely executed by multiple threads at the same time, without causing data corruption, race conditions, or unpredictable behavior. Thread safety is essential in modern, concurrent applications, such as web servers or responsive UIs where multiple threads often access shared resources.

Thread safety is achieved by using synchronization mechanisms (like lock, Monitor, or Interlocked) to control access to shared data, ensuring that only one thread can modify a resource at a time or that operations are performed atomically.

Why Does Thread Safety Matter?

Have you ever built a multi-threaded application and encountered strange bugs that appear randomly and are hard to reproduce? These are often symptoms of thread safety issues, one of the most challenging aspects of concurrent programming in C#.

Real-World Analogy

Imagine threads as people and a shared variable as a notepad. If two people read a number from the same notepad, both add one to it in their minds, and then write their results back, you might end up with the wrong value because they didn’t coordinate their actions. This is a classic example of a race condition.

In summary: Thread safety ensures your C# code works correctly and predictably, even when accessed by multiple threads at once. Without it, you risk data loss, corruption, and hard-to-find bugs.

Why should you care about thread safety? Because modern applications are increasingly concurrent. From web servers handling multiple requests to responsive UIs that don’t freeze during background operations, multi-threading is everywhere. But with this power comes responsibility: you need to properly manage how threads access shared resources.

The good news is that C# offers several effective mechanisms to achieve thread safety. Some approaches involve synchronizing access to shared data (like locks and monitors), while others avoid sharing mutable data altogether (through immutable objects or thread-local storage).

I’ve spent years debugging concurrency issues, and I’ve learned that understanding thread safety isn’t just theoretical; it’s essential for building reliable applications that scale.

Understanding Race Conditions

To understand why thread safety is important, consider this example of a race condition:

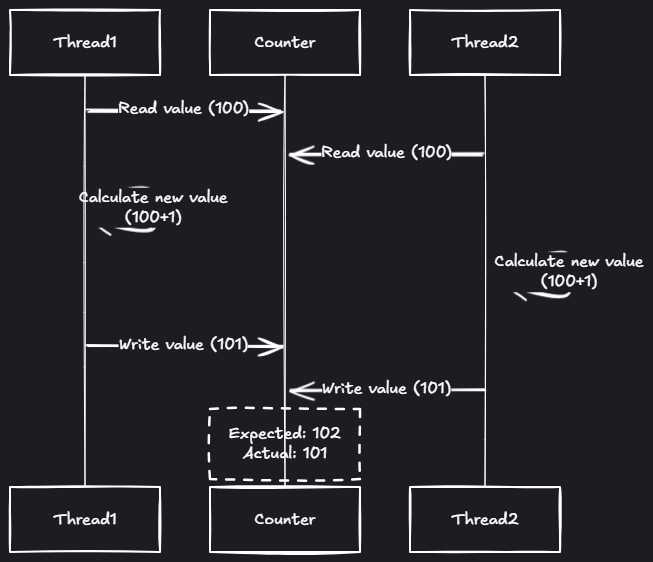

Race Condition Example: Two Threads Reading and Writing to a Shared Counter

In this scenario, two threads read the same counter value (100) at nearly the same time, each increments it locally, and each writes its result back. Instead of getting 102, we end up with 101 because the second thread’s write overwrites the first thread’s update.

This is a classic race condition that thread safety mechanisms are designed to prevent.
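To see the lost update from the diagram in real code, here is a minimal sketch. The RaceDemo class and its method names are hypothetical, chosen only for illustration. It increments a shared int from several parallel workers, once with plain ++ and once with Interlocked.Increment. Because ++ is a separate read, add, and write, the unsynchronized total can come out lower than expected, and the exact result varies from run to run.

```csharp
using System.Threading;
using System.Threading.Tasks;

public static class RaceDemo
{
    // Unsynchronized: counter++ is a read, an add, and a write,
    // so two workers can read the same value and one update is lost.
    public static int UnsafeCount(int workers, int iterations)
    {
        int counter = 0;
        Parallel.For(0, workers, _ =>
        {
            for (int i = 0; i < iterations; i++)
            {
                counter++;
            }
        });
        return counter;
    }

    // Same loop, but the read-modify-write happens as one atomic step.
    public static int SafeCount(int workers, int iterations)
    {
        int counter = 0;
        Parallel.For(0, workers, _ =>
        {
            for (int i = 0; i < iterations; i++)
            {
                Interlocked.Increment(ref counter);
            }
        });
        return counter;
    }
}
```

SafeCount always returns workers × iterations. UnsafeCount can return less, and on a multi-core machine with large iteration counts it usually does.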

C# provides several mechanisms to achieve thread safety, each with its own strengths:

Synchronization mechanisms: The lock keyword is the most common approach; it prevents multiple threads from executing a critical section of code simultaneously. For more advanced scenarios, you can use the Monitor class, which lock is built on and which adds timeouts and wait/pulse signaling.

Atomic operations: The Interlocked class provides simple operations such as increment, decrement, add, exchange, and compare-and-exchange that complete as a single indivisible step, so no other thread can observe them half-done.

Memory visibility: The volatile keyword stops the compiler, JIT, and CPU from caching a field’s value or reordering accesses to it in ways that would hide another thread’s write, which is crucial when one thread signals another through a flag.

Thread isolation: With the ThreadStatic attribute, each thread gets its own copy of a static field, completely avoiding shared state.

Resource limiting: Semaphore and SemaphoreSlim let you control exactly how many threads can simultaneously access a resource or section of code.

Signaling: The ManualResetEvent and AutoResetEvent classes provide mechanisms for threads to signal each other when operations complete or conditions change.

Reader-writer patterns: The ReaderWriterLockSlim class optimizes scenarios where many threads read data but only occasionally write to it, allowing concurrent reads while ensuring exclusive write access.

Each of these tools solves different threading problems. Let’s examine them one by one.

It is important to choose the appropriate synchronization mechanism based on the specific needs of your program.

lock

Using locks is a common way to achieve thread safety in C#. When a thread acquires a lock on an object, any other thread that tries to lock the same object blocks until the lock is released.

This ensures that only one thread can access the shared resource at a time, which helps to prevent race conditions and other problems.

To use locks in C#, use the lock keyword to acquire a mutual-exclusion lock on an object. The syntax is as follows, where lockObject is any reference-type expression:

```csharp
lock (lockObject)
{
    // Critical section of code goes here
    // Only one thread can execute this code at a time
}
```

The object in the lock statement can be any reference type. It is typically a private readonly object field used only as the lock object; avoid locking on this, on Type objects, or on strings, because code outside your class can lock on the same instance. For example:

```csharp
private readonly object _lock = new object();

public void ProcessData()
{
    lock (_lock)
    {
        // Critical section of code goes here
        // Only one thread can execute this code at a time
    }
}
```

Release the lock as soon as possible to avoid blocking other threads unnecessarily, and keep the critical section small to reduce contention. To reduce the risk of deadlocks, avoid calling unknown code or acquiring other locks while holding one; when you must hold several locks, always acquire them in the same order.

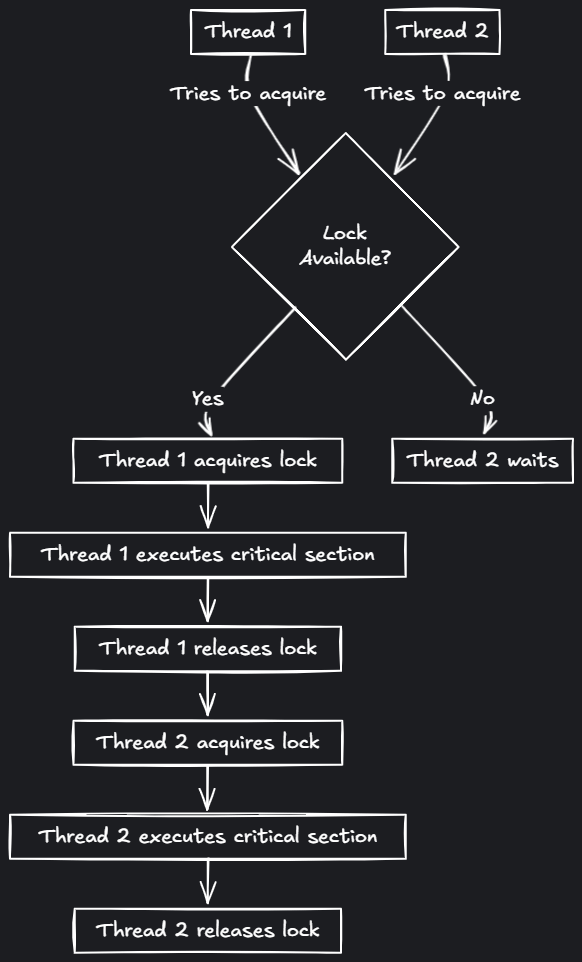

The following diagram illustrates how lock acquisition works when multiple threads try to access a critical section:

Lock Acquisition Flow: How Multiple Threads Coordinate Access to Critical Sections
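The lock statement is most valuable when several fields must change together, or when a check and an update must happen as one step, which Interlocked alone cannot do. Here is a minimal sketch of that check-then-act pattern; the Account class, its members, and the use of decimal are illustrative assumptions, not code from a specific library.

```csharp
public class Account
{
    private readonly object _sync = new object(); // private lock object, never exposed
    private decimal _balance;
    private int _transactionCount;

    public void Deposit(decimal amount)
    {
        lock (_sync)
        {
            // Both fields change together under one lock
            _balance += amount;
            _transactionCount++;
        }
    }

    public bool TryWithdraw(decimal amount)
    {
        lock (_sync)
        {
            // The check and the update must happen under the same lock;
            // otherwise two threads could both pass the check and overdraw.
            if (_balance < amount)
            {
                return false;
            }
            _balance -= amount;
            _transactionCount++;
            return true;
        }
    }

    public (decimal Balance, int Transactions) Snapshot()
    {
        // Reading under the lock guarantees a consistent pair of values
        lock (_sync)
        {
            return (_balance, _transactionCount);
        }
    }
}
```

For example, after Deposit(100m), 1,000 concurrent TryWithdraw(1m) calls leave the balance at exactly 0 with 101 recorded transactions.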

Interlocked

The System.Threading.Interlocked class provides atomic operations for common arithmetic and bitwise operations in C#.

These operations can be useful for updating variables that are shared between multiple threads in a thread-safe manner.

Here are some examples of using the Interlocked class:

- Incrementing or decrementing a variable:

```csharp
private int _counter;

public void IncrementCounter()
{
    Interlocked.Increment(ref _counter);
}

public void DecrementCounter()
{
    Interlocked.Decrement(ref _counter);
}
```

- Adding or subtracting a value from a variable:

```csharp
private int _total;

public void AddToTotal(int value)
{
    Interlocked.Add(ref _total, value);
}

public void SubtractFromTotal(int value)
{
    Interlocked.Add(ref _total, -value);
}
```

- Comparing and exchanging values:

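```csharp
private int _value;

// Sets _value to newValue only if it still equals expected.
// CompareExchange returns the original value, so a match means the swap happened.
public bool TrySetValue(int expected, int newValue)
{
    return Interlocked.CompareExchange(ref _value, newValue, expected) == expected;
}
```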

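The Interlocked class provides several other atomic operations, such as Exchange (an unconditional swap that returns the previous value), Read (an atomic read of a 64-bit long, which matters on 32-bit platforms), and, since .NET 5, the bitwise And and Or operations.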

It is important to note that the Interlocked class only provides atomic operations for certain types and operations.

If you need to perform a more complex atomic update, or need to keep several variables consistent with each other, use a lock or another synchronization mechanism. The volatile keyword does not help here, because it provides no atomicity.
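When no single Interlocked method does what you need, the usual technique is a compare-and-swap loop: read the current value, compute the new one, and retry with CompareExchange until no other thread has interfered. The sketch below applies it to an atomic maximum; AtomicMath.Max is a hypothetical helper, not part of the Interlocked API.

```csharp
using System.Threading;

public static class AtomicMath
{
    // Atomically sets location to max(location, value) using a compare-and-swap loop.
    // Returns the value that ended up stored.
    public static int Max(ref int location, int value)
    {
        int current = Volatile.Read(ref location);
        while (value > current)
        {
            // Try to replace current with value; CompareExchange returns what was really there
            int observed = Interlocked.CompareExchange(ref location, value, current);
            if (observed == current)
            {
                return value; // our write won
            }
            current = observed; // another thread changed it; retry with the fresh value
        }
        return current; // stored value is already at least as large
    }
}
```

The loop is lock-free: a thread retries only when another thread changed the value in between, and it stops as soon as the stored value is already at least as large as its own.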

volatile

The volatile keyword in C# marks a field that may be modified by multiple threads. It tells the compiler, the JIT, and the processor not to apply optimizations that assume single-threaded access, such as keeping the value in a register or reordering the access.

A volatile read has acquire semantics (later reads and writes cannot be moved before it), and a volatile write has release semantics (earlier reads and writes cannot be moved after it).

This is useful for simple flags, where one thread must reliably see a value that another thread has set.

To declare a field as volatile, use the volatile keyword in the field declaration. For example:

```csharp
private volatile bool _stop;

public void Stop()
{
    _stop = true;
}
```

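Alternatively, you can leave the field as an ordinary (non-volatile) field and use the Volatile.Read and Volatile.Write methods for individual accesses. For example:

```csharp
// Not declared volatile: Volatile.Read/Write supply the ordering per access
private int _value;

public int GetValue()
{
    return Volatile.Read(ref _value);
}

public void SetValue(int newValue)
{
    Volatile.Write(ref _value, newValue);
}
```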

It is important to note that the volatile keyword is not a replacement for locks or other synchronization mechanisms. It guarantees visibility and ordering for individual reads and writes, but it does not make compound operations such as _counter++ atomic.
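A typical place for volatile is a stop flag polled by a worker thread. The sketch below uses a hypothetical Worker class and VolatileDemo helper; the volatile modifier ensures the loop re-reads _stop on every iteration instead of the JIT hoisting the read out of the loop. In new code, a CancellationToken is often the more idiomatic way to request cancellation.

```csharp
using System;
using System.Threading;

public class Worker
{
    // volatile: each loop iteration must observe a write made by another thread
    private volatile bool _stop;

    public void Run()
    {
        while (!_stop)
        {
            Thread.SpinWait(100); // stand-in for a unit of real work
        }
    }

    public void Stop() => _stop = true;
}

public static class VolatileDemo
{
    // Starts a worker, lets it run briefly, then asks it to stop.
    // Returns true if the worker thread exited within the timeout.
    public static bool RunAndStop()
    {
        var worker = new Worker();
        var thread = new Thread(worker.Run) { IsBackground = true };
        thread.Start();
        Thread.Sleep(50);
        worker.Stop();
        return thread.Join(TimeSpan.FromSeconds(5));
    }
}
```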

ThreadStatic

The ThreadStatic attribute in C# can be used to declare a field as thread-static, which means that each thread will have its own copy of the field.

This is useful when each thread needs its own state: because nothing is shared, there is nothing to race on.

To declare a field as thread-static, you can use the ThreadStatic attribute in the field declaration. For example:

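```csharp
[ThreadStatic]
private static int _threadId;

public void PrintThreadId()
{
    // Each thread has its own copy, which stays 0 until this thread assigns it
    if (_threadId == 0)
    {
        _threadId = Environment.CurrentManagedThreadId;
    }

    Console.WriteLine(_threadId);
}
```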

Each thread sees the default value for the field’s type until that thread assigns a value. Be careful with initializers: an initializer in the declaration (for example, = 42) runs only once, on the thread that triggers the class’s static initialization, so every other thread still sees the default value.

It is also important to note that the ThreadStatic attribute applies only to static fields.

If you need per-thread initialization, or thread-specific data stored in an instance field, use the ThreadLocal<T> class instead.
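ThreadLocal<T> avoids the initializer pitfall because its value factory runs on each thread the first time that thread reads Value. In this minimal sketch (PerThreadCounter is a hypothetical name), each thread increments its own counter without a lock, and every thread ends with exactly its own number of increments.

```csharp
using System.Threading;

public static class PerThreadCounter
{
    // Starts `threads` threads; each increments its own ThreadLocal copy
    // `increments` times and records the final value it saw.
    public static int[] Run(int threads, int increments)
    {
        var results = new int[threads];
        // The factory runs once per thread, on that thread's first access to Value
        using var counter = new ThreadLocal<int>(() => 0);

        var workers = new Thread[threads];
        for (int t = 0; t < threads; t++)
        {
            int index = t; // stable copy of the loop variable for the closure
            workers[t] = new Thread(() =>
            {
                for (int i = 0; i < increments; i++)
                {
                    counter.Value++; // no lock: this value belongs to the current thread only
                }
                results[index] = counter.Value;
            });
            workers[t].Start();
        }

        foreach (var worker in workers)
        {
            worker.Join();
        }
        return results;
    }
}
```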

System.Threading.Monitor

The System.Threading.Monitor class provides methods for acquiring and releasing locks on objects and for waiting for and pulsing threads.

It can be useful for advanced synchronization scenarios where you need more control over the locking behavior of your program.

Here are some examples of using the Monitor class:

Acquiring and releasing a lock:

```csharp
private readonly object _lock = new object();

public void ProcessData()
{
    Monitor.Enter(_lock);
    try
    {
        // Critical section of code goes here
        // Only one thread can execute this code at a time
    }
    finally
    {
        Monitor.Exit(_lock);
    }
}
```

Waiting for and pulsing a thread:

```csharp
private readonly object _lock = new object();
private bool _dataReady;

public void WaitForData()
{
    Monitor.Enter(_lock);
    try
    {
        // Re-check the condition after every wake-up; Wait releases the lock while waiting
        while (!_dataReady)
        {
            Monitor.Wait(_lock);
        }
    }
    finally
    {
        Monitor.Exit(_lock);
    }
}

public void SetDataReady()
{
    Monitor.Enter(_lock);
    try
    {
        _dataReady = true;
        Monitor.Pulse(_lock); // wake one waiting thread
    }
    finally
    {
        Monitor.Exit(_lock);
    }
}
```

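The Monitor class also provides several other methods, such as TryEnter (optionally with a timeout), PulseAll (which wakes every waiting thread rather than just one), and Wait overloads that accept a timeout, which can be useful in different synchronization scenarios.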
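TryEnter is the usual way to avoid waiting forever on a busy lock. The sketch below uses the Monitor.TryEnter(object, TimeSpan, ref bool) overload with a lockTaken flag, so Exit runs only if the lock was actually acquired. TimedResource and TryDoWork are hypothetical names.

```csharp
using System;
using System.Threading;

public class TimedResource
{
    private readonly object _lock = new object();

    // Runs work under the lock, or returns false if the lock
    // could not be acquired within the timeout.
    public bool TryDoWork(TimeSpan timeout, Action work)
    {
        bool lockTaken = false;
        try
        {
            Monitor.TryEnter(_lock, timeout, ref lockTaken);
            if (!lockTaken)
            {
                return false; // lock was busy for the whole timeout
            }
            work();
            return true;
        }
        finally
        {
            // Only release a lock we actually hold
            if (lockTaken)
            {
                Monitor.Exit(_lock);
            }
        }
    }
}
```

Returning false lets the caller decide whether to retry, log, or give up, instead of blocking indefinitely.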

System.Threading.Semaphore and SemaphoreSlim

The System.Threading.Semaphore class in C# provides a synchronization mechanism that allows a certain number of threads to access a resource or a section of code concurrently.

It is useful for limiting how many threads can use a resource at the same time, such as connections to a database or calls to an external service.

Here is an example of using a semaphore to limit the number of threads that can access a resource:

```csharp
private static readonly SemaphoreSlim _semaphore = new SemaphoreSlim(3);

public void AccessResource()
{
    _semaphore.Wait();
    try
    {
        // Access the resource here
        // Only 3 threads can execute this code at a time
    }
    finally
    {
        _semaphore.Release();
    }
}
```

The SemaphoreSlim class is a lightweight alternative to the Semaphore class that does not rely on an operating-system kernel semaphore, which makes it the usual choice for synchronization within a single process.

Use the Semaphore class when you need a named system semaphore, for example to coordinate access across processes.

SemaphoreSlim also provides WaitAsync for use with async/await, Wait overloads that accept a timeout or a CancellationToken, and a CurrentCount property.
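Because SemaphoreSlim supports WaitAsync, it is a common way to throttle asynchronous work; the lock statement cannot be used here because its body may not contain an await. This sketch assumes simulated jobs (Task.Delay) and a hypothetical Throttle.RunAsync helper that also records the highest number of jobs it observed running at once.

```csharp
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class Throttle
{
    // Runs `jobs` simulated jobs, at most `maxConcurrency` at a time,
    // and returns the highest concurrency actually observed.
    public static async Task<int> RunAsync(int jobs, int maxConcurrency)
    {
        using var semaphore = new SemaphoreSlim(maxConcurrency);
        var gate = new object();
        int running = 0;
        int observedMax = 0;

        async Task RunJobAsync()
        {
            await semaphore.WaitAsync(); // asynchronously wait for a free slot
            try
            {
                lock (gate)
                {
                    running++;
                    if (running > observedMax) observedMax = running;
                }

                await Task.Delay(20); // simulated I/O; no lock is held across the await

                lock (gate)
                {
                    running--;
                }
            }
            finally
            {
                semaphore.Release(); // always give the slot back
            }
        }

        await Task.WhenAll(Enumerable.Range(0, jobs).Select(_ => RunJobAsync()));
        return observedMax;
    }
}
```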

System.Threading.ManualResetEvent and System.Threading.AutoResetEvent

The System.Threading.ManualResetEvent and System.Threading.AutoResetEvent classes in C# provide synchronization mechanisms that allow threads to signal each other and to wait for signals. They can be useful for coordinating the behavior of multiple threads.

Here is an example of using a manual reset event to signal a thread:

```csharp
private static readonly ManualResetEvent _event = new ManualResetEvent(false);

public void WaitForSignal()
{
    _event.WaitOne();
    // Continue execution when the event is signaled
}

public void Signal()
{
    _event.Set();
}
```

The ManualResetEvent class remains signaled until it is explicitly reset using the Reset method. While it is signaled, every waiting thread is released, and any thread that calls WaitOne later passes straight through.

The AutoResetEvent class is similar to the ManualResetEvent class, but it automatically returns to the unsignaled state after releasing a single waiting thread. Many threads can wait on it, but each call to Set lets only one of them through.

Here is an example of using an auto reset event:

```csharp
private static readonly AutoResetEvent _event = new AutoResetEvent(false);

public void WaitForSignal()
{
    _event.WaitOne();
    // Continue execution when the event is signaled
}

public void Signal()
{
    _event.Set();
}
```

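The ManualResetEvent and AutoResetEvent classes also provide Reset and WaitOne overloads that accept a timeout, and they can be combined with WaitHandle.WaitAll or WaitHandle.WaitAny. For signaling within a single process, ManualResetEventSlim is a lighter-weight alternative to ManualResetEvent.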
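AutoResetEvent’s release-one-then-reset behavior makes it handy for strict turn-taking between two threads. In the sketch below (PingPong is a hypothetical example), each thread waits on its own event and then signals the other thread’s event, so the output always alternates. A ManualResetEvent would need an explicit Reset after each wait, because it stays signaled after Set.

```csharp
using System.Collections.Generic;
using System.Threading;

public static class PingPong
{
    // Two threads take turns appending to a shared list, `rounds` times each.
    public static List<string> Run(int rounds)
    {
        var log = new List<string>();
        using var pingTurn = new AutoResetEvent(true);  // ping goes first
        using var pongTurn = new AutoResetEvent(false);

        var ping = new Thread(() =>
        {
            for (int i = 0; i < rounds; i++)
            {
                pingTurn.WaitOne(); // consume our turn; the event resets automatically
                log.Add("ping");    // safe: the two threads never run this part at once
                pongTurn.Set();     // hand the turn to the other thread
            }
        });

        var pong = new Thread(() =>
        {
            for (int i = 0; i < rounds; i++)
            {
                pongTurn.WaitOne();
                log.Add("pong");
                pingTurn.Set();
            }
        });

        ping.Start();
        pong.Start();
        ping.Join();
        pong.Join();
        return log;
    }
}
```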

System.Threading.ReaderWriterLockSlim

The System.Threading.ReaderWriterLockSlim class in C# provides a synchronization mechanism that allows multiple threads to read from a resource concurrently, but allows only one thread to write to the resource at a time. It is useful for synchronizing access to resources that are frequently read but infrequently written.

Here is an example of using a reader-writer lock to synchronize access to a resource:

```csharp
private readonly ReaderWriterLockSlim _lock = new ReaderWriterLockSlim();

public void ReadFromResource()
{
    _lock.EnterReadLock();
    try
    {
        // Read from the resource here
        // Multiple threads can execute this code at a time
    }
    finally
    {
        _lock.ExitReadLock();
    }
}

public void WriteToResource()
{
    _lock.EnterWriteLock();
    try
    {
        // Write to the resource here
        // Only one thread can execute this code at a time
    }
    finally
    {
        _lock.ExitWriteLock();
    }
}
```

The ReaderWriterLockSlim class is the newer, simpler, and faster replacement for the older ReaderWriterLock class, and Microsoft recommends it for all new development.

ReaderWriterLockSlim also provides TryEnterReadLock and TryEnterWriteLock, which accept a timeout, and EnterUpgradeableReadLock, which lets a thread that started as a reader upgrade to a write lock without releasing its lock first.

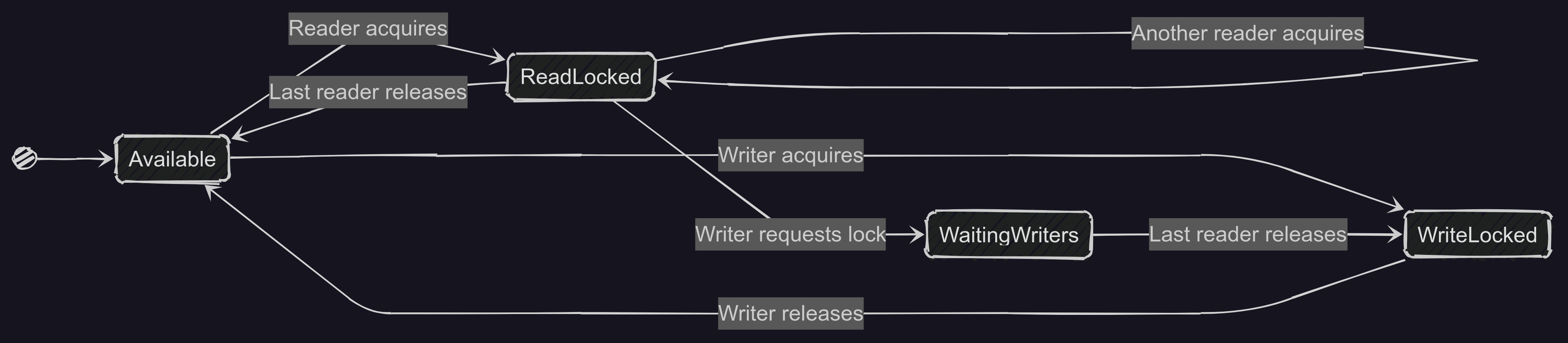

The following state diagram illustrates how a ReaderWriterLock transitions between different states:

ReaderWriterLock State Transitions: Managing Concurrent Reads and Exclusive Writes
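An upgradeable read lock suits "look up, and add only if missing" logic. A thread holding it can read alongside ordinary readers and then call EnterWriteLock to upgrade without releasing, so no other writer can slip in between the check and the insert. Only one thread can hold the upgradeable lock at a time, so this serializes the get-or-add path. UpgradeableRegistry is a hypothetical class used only to illustrate the pattern.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

public class UpgradeableRegistry
{
    private readonly Dictionary<string, int> _items = new Dictionary<string, int>();
    private readonly ReaderWriterLockSlim _lock = new ReaderWriterLockSlim();
    private int _factoryCalls;

    public int FactoryCalls => Volatile.Read(ref _factoryCalls);

    public int GetOrAdd(string key, Func<string, int> factory)
    {
        // Coexists with ordinary readers, but excludes writers and other upgraders
        _lock.EnterUpgradeableReadLock();
        try
        {
            if (_items.TryGetValue(key, out int existing))
            {
                return existing;
            }

            // Upgrade without releasing, so the check above stays valid
            _lock.EnterWriteLock();
            try
            {
                Interlocked.Increment(ref _factoryCalls);
                int value = factory(key);
                _items[key] = value;
                return value;
            }
            finally
            {
                _lock.ExitWriteLock();
            }
        }
        finally
        {
            _lock.ExitUpgradeableReadLock();
        }
    }
}
```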

System.Threading.Barrier

The System.Threading.Barrier class in C# provides a synchronization mechanism that lets multiple threads meet at a predetermined point, known as a “barrier,” before any of them continues. It is useful for phased work, where every thread must finish one phase before any thread starts the next.

Here is an example of using a barrier to synchronize the execution of multiple threads:

```csharp
private static readonly Barrier _barrier = new Barrier(2, b =>
{
    Console.WriteLine("All threads have reached the barrier");
});

public void PerformTask()
{
    Console.WriteLine("Thread {0} has started the task", Thread.CurrentThread.ManagedThreadId);
    _barrier.SignalAndWait();
    Console.WriteLine("Thread {0} has completed the task", Thread.CurrentThread.ManagedThreadId);
}
```

In this example, the barrier is initialized with a participant count of 2, which means that it will wait for 2 threads to reach the barrier before continuing execution.

When the second thread reaches the barrier, the post-phase action passed to the constructor runs once (writing a message to the console), and then both threads are released to continue.

The Barrier class also provides AddParticipant, AddParticipants, RemoveParticipant, and RemoveParticipants, as well as SignalAndWait overloads that accept a timeout or a CancellationToken.

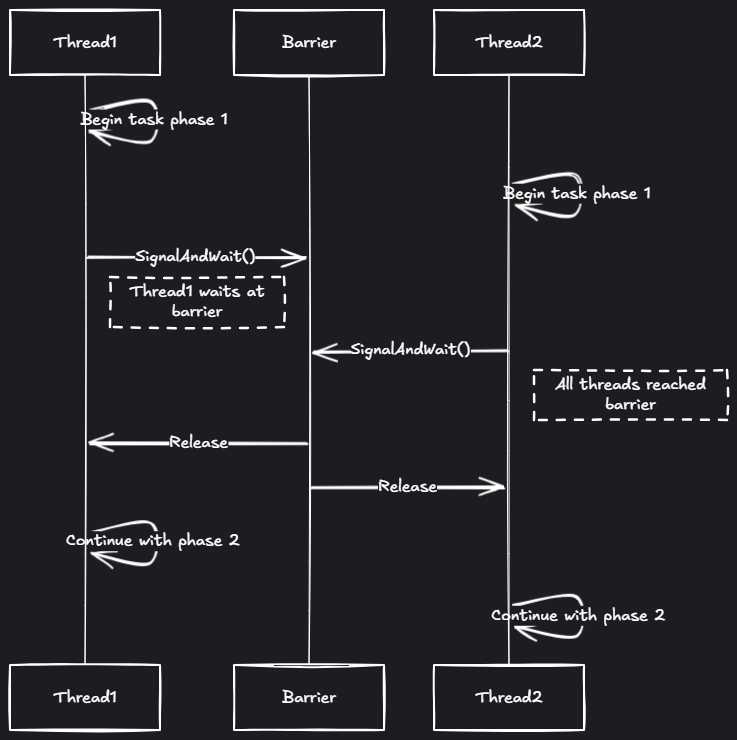

Here’s a sequence diagram showing how the Barrier works with multiple threads:

Barrier Synchronization: Multiple Threads Coordinating at a Common Point Before Proceeding
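Barriers shine in phased algorithms, where no thread may start phase N + 1 until every thread has finished phase N. In this sketch (PhasedWork is a hypothetical name), each worker marks its completion of a phase in a counter array; the post-phase action, which runs once per phase after all participants have signaled, records how many workers had finished. If the barrier does its job, every recorded count equals the number of workers.

```csharp
using System.Collections.Generic;
using System.Threading;

public static class PhasedWork
{
    // Runs `workers` threads through `phases` phases and returns, for each phase,
    // how many workers had finished it when the post-phase action ran.
    public static List<int> Run(int workers, int phases)
    {
        var finishedPerPhase = new int[phases];
        var recorded = new List<int>();

        // The post-phase action runs on one thread while all participants are blocked,
        // and CurrentPhaseNumber is the phase that just completed.
        using var barrier = new Barrier(workers, b =>
            recorded.Add(finishedPerPhase[(int)b.CurrentPhaseNumber]));

        var threads = new Thread[workers];
        for (int w = 0; w < workers; w++)
        {
            threads[w] = new Thread(() =>
            {
                for (int p = 0; p < phases; p++)
                {
                    // ... this worker's share of phase p would go here ...
                    Interlocked.Increment(ref finishedPerPhase[p]);
                    barrier.SignalAndWait(); // block until every worker finishes phase p
                }
            });
            threads[w].Start();
        }

        foreach (var thread in threads)
        {
            thread.Join();
        }
        return recorded;
    }
}
```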

System.Threading.CountdownEvent

The System.Threading.CountdownEvent class in C# provides a synchronization mechanism that allows a thread to wait for a countdown to reach zero. It can be useful for coordinating the behavior of multiple threads that perform a task in parallel.

Here is an example of using a countdown event to synchronize the execution of multiple threads:

```csharp
private static readonly CountdownEvent _countdown = new CountdownEvent(2);

public void PerformTask()
{
    Console.WriteLine("Thread {0} has started the task", Thread.CurrentThread.ManagedThreadId);
    _countdown.Signal();
    Console.WriteLine("Thread {0} has completed the task", Thread.CurrentThread.ManagedThreadId);
}

public void WaitForTasks()
{
    _countdown.Wait();
    Console.WriteLine("All tasks have completed");
}
```

In this example, the countdown event is initialized with a count of 2, which means that it will wait for 2 signals before continuing execution. When the second thread calls the Signal method, the countdown will reach zero and the waiting thread will continue execution.

The CountdownEvent class also provides AddCount and TryAddCount to raise the count, Reset to start over, a CurrentCount property, and Wait overloads that accept a timeout or a CancellationToken.
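A common use of CountdownEvent is fan-out/fan-in: queue N work items, have each one call Signal when it finishes (in a finally block, so a failing item still counts down), and Wait for all of them. This sketch uses ThreadPool.QueueUserWorkItem; FanOut.SumOfSquares is a hypothetical example.

```csharp
using System.Threading;

public static class FanOut
{
    // Squares each input on the thread pool and returns the sum once all items finish.
    public static int SumOfSquares(int[] inputs)
    {
        int total = 0;
        // An initial count of 0 is allowed; the event then starts out already set
        using var countdown = new CountdownEvent(inputs.Length);

        foreach (int input in inputs)
        {
            ThreadPool.QueueUserWorkItem(_ =>
            {
                try
                {
                    Interlocked.Add(ref total, input * input);
                }
                finally
                {
                    countdown.Signal(); // count down even if the work throws
                }
            });
        }

        countdown.Wait(); // blocks until every work item has signaled
        return Volatile.Read(ref total);
    }
}
```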

Real-World Example: Building a Thread-Safe Cache

Let’s create a practical example that demonstrates thread safety concerns in a common scenario: a simple caching system. Caches are widely used to improve performance, but in multi-threaded environments, they require careful synchronization.

Here’s how we might implement a thread-safe cache:

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

public class ThreadSafeCache<TKey, TValue>
{
    private readonly Dictionary<TKey, TValue> _cache = new Dictionary<TKey, TValue>();
    private readonly ReaderWriterLockSlim _cacheLock = new ReaderWriterLockSlim();
    private readonly Func<TKey, TValue> _valueFactory;

    public ThreadSafeCache(Func<TKey, TValue> valueFactory)
    {
        _valueFactory = valueFactory ?? throw new ArgumentNullException(nameof(valueFactory));
    }

    public TValue GetOrAdd(TKey key)
    {
        // First try with a read lock (optimistic)
        _cacheLock.EnterReadLock();
        try
        {
            if (_cache.TryGetValue(key, out TValue value))
            {
                return value;
            }
        }
        finally
        {
            _cacheLock.ExitReadLock();
        }

        // Not found: the read lock is released above, so take the write lock
        _cacheLock.EnterWriteLock();
        try
        {
            // Double-check in case another thread added the value
            // while we were waiting for the write lock
            if (_cache.TryGetValue(key, out TValue value))
            {
                return value;
            }

            // Value not in cache, create it and add to cache
            value = _valueFactory(key);
            _cache.Add(key, value);
            return value;
        }
        finally
        {
            _cacheLock.ExitWriteLock();
        }
    }

    public bool TryGetValue(TKey key, out TValue value)
    {
        _cacheLock.EnterReadLock();
        try
        {
            return _cache.TryGetValue(key, out value);
        }
        finally
        {
            _cacheLock.ExitReadLock();
        }
    }

    public void Clear()
    {
        _cacheLock.EnterWriteLock();
        try
        {
            _cache.Clear();
        }
        finally
        {
            _cacheLock.ExitWriteLock();
        }
    }

    public int Count
    {
        get
        {
            _cacheLock.EnterReadLock();
            try
            {
                return _cache.Count;
            }
            finally
            {
                _cacheLock.ExitReadLock();
            }
        }
    }
}
```

This cache implementation demonstrates several thread safety principles:

- It uses ReaderWriterLockSlim to allow concurrent reads while ensuring exclusive access during writes.
- It double-checks the dictionary after acquiring the write lock, so when several threads miss on the same key at once, only the first one creates the value.
- All operations that access the internal dictionary are properly synchronized.

Here’s how you might use this thread-safe cache in a real application:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Creating a thread-safe cache for expensive database operations
var userCache = new ThreadSafeCache<int, UserData>(userId =>
{
    Console.WriteLine($"Fetching user {userId} from database...");
    // In a real app, this would hit a database
    Thread.Sleep(500); // Simulate network/DB delay
    return new UserData { Id = userId, Name = $"User {userId}" };
});

// Parallel operations using the cache
Parallel.For(0, 10, i =>
{
    // Multiple threads can safely use the cache concurrently
    var user = userCache.GetOrAdd(i % 3); // We use mod to generate cache hits
    Console.WriteLine($"Thread {Thread.CurrentThread.ManagedThreadId} got user {user.Id}");
});

Console.WriteLine($"Cache now contains {userCache.Count} items");

public class UserData
{
    public int Id { get; set; }
    public string Name { get; set; }
}
```

This example demonstrates how thread safety mechanisms can be combined to build a practical, performance-oriented component that safely manages concurrent access to shared resources.
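One trade-off of the ReaderWriterLockSlim version is that it runs the value factory while holding the write lock, so a slow factory (500 ms in the example) blocks every reader, even for unrelated keys. A common alternative, sketched below, stores Lazy<TValue> wrappers in a ConcurrentDictionary. GetOrAdd may build a spare wrapper under contention, but only the stored wrapper is ever evaluated, so the factory runs at most once per key. LazyCache is a hypothetical name; note also that with this mode, if the factory throws, Lazy caches that exception for the key.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

public class LazyCache<TKey, TValue> where TKey : notnull
{
    private readonly ConcurrentDictionary<TKey, Lazy<TValue>> _cache =
        new ConcurrentDictionary<TKey, Lazy<TValue>>();
    private readonly Func<TKey, TValue> _valueFactory;

    public LazyCache(Func<TKey, TValue> valueFactory)
    {
        _valueFactory = valueFactory ?? throw new ArgumentNullException(nameof(valueFactory));
    }

    public TValue GetOrAdd(TKey key)
    {
        // Every caller gets the single stored Lazy; ExecutionAndPublication
        // guarantees its factory runs only once, without a cache-wide lock.
        var lazy = _cache.GetOrAdd(key,
            k => new Lazy<TValue>(() => _valueFactory(k), LazyThreadSafetyMode.ExecutionAndPublication));
        return lazy.Value;
    }

    public int Count => _cache.Count;
}
```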

Comparison of Thread Safety Mechanisms

Different synchronization techniques are suited for different scenarios. Here’s a quick comparison:

| Mechanism | Best for | Performance Impact | Ease of Use |
|---|---|---|---|
| lock | General purpose synchronization | Moderate | Simple |
| Interlocked | Simple atomic operations | Low | Simple |
| ReaderWriterLockSlim | Read-heavy scenarios | Low for reads, moderate for writes | Moderate |
| Immutability | When shared state doesn’t change | Low | Advanced |
| ThreadStatic | Thread-isolated state | Very low | Simple |
| Semaphore | Limiting concurrent access | Moderate | Moderate |

The right choice depends on your specific requirements, but understanding these options gives you a powerful toolbox for writing thread-safe code.

Frequently Asked Questions

What does thread safety mean in C#?

Thread safety means your code behaves correctly when multiple threads execute it at the same time, without race conditions or data corruption. It is usually achieved with synchronization mechanisms such as lock or atomic operations like those in Interlocked.

What’s the difference between a lock and Monitor in C#?

The lock keyword in C# is shorthand for Monitor.Enter and Monitor.Exit wrapped in a try/finally block. Monitor provides more advanced capabilities, such as Monitor.Wait(), Monitor.Pulse(), and Monitor.PulseAll() for thread coordination. It also allows timeouts (through Monitor.TryEnter) and conditional synchronization, giving you finer control than lock.

When should I use Interlocked vs. lock in C#?

Use Interlocked for simple atomic operations on numeric values (like Increment, Decrement, Exchange, Add) when performance is critical. Use lock when protecting a larger block of code or more complex operations. Interlocked is faster because it uses low-level CPU atomic instructions without the overhead of acquiring and releasing a lock.

What are some common thread safety issues in C# applications?

How do concurrent collections help with thread safety?

Collections in System.Collections.Concurrent are specifically designed for multi-threaded access without external locking. They use fine-grained locking or lock-free algorithms internally to provide thread-safe operations. Examples include ConcurrentDictionary, ConcurrentQueue, ConcurrentBag, and BlockingCollection, which offer better scalability than manually synchronized regular collections.

Is the async/await pattern thread-safe by default?

No. async/await doesn’t automatically make your code thread-safe. While it simplifies asynchronous programming, it doesn’t address shared state problems. If multiple async tasks access the same data, you still need synchronization mechanisms. Additionally, the continuation of an async method may run on a different thread, which can create race conditions when it accesses shared resources. Note that a lock block cannot contain an await; SemaphoreSlim.WaitAsync is a common alternative.
